I am a Ph.D. student in the College of Computer Science and Technology at Zhejiang University, advised by Prof. Yang Yang. I also obtained a Bachelor’s degree in Software Engineering from Zhejiang University.

My research primarily focuses on graph data mining and large-scale graph neural networks.

I have published several papers.

Currently, I am researching the synergistic interaction between large language models and graph data, applying it to complex real-world scenarios. I am also seeking job opportunities in AI startups. If you’re interested in my work or know of any suitable positions, please feel free to contact me.

🔥 News

- 2025.01: 🎉🎉 Our paper KAA has been accepted to ICLR’25.

🎖 Honors and Awards

- AAAI 2023 Distinguished Paper Award (Rank 1st)

- WAIC 2023 Youth Outstanding Paper Nomination Award (Youngest Ever Winner)

- Chinese Institute of Electronics 2024 Outstanding Ph.D. Award

- AI Time 2023 Top 10 Academic Presentations of the Year

📝 Publications

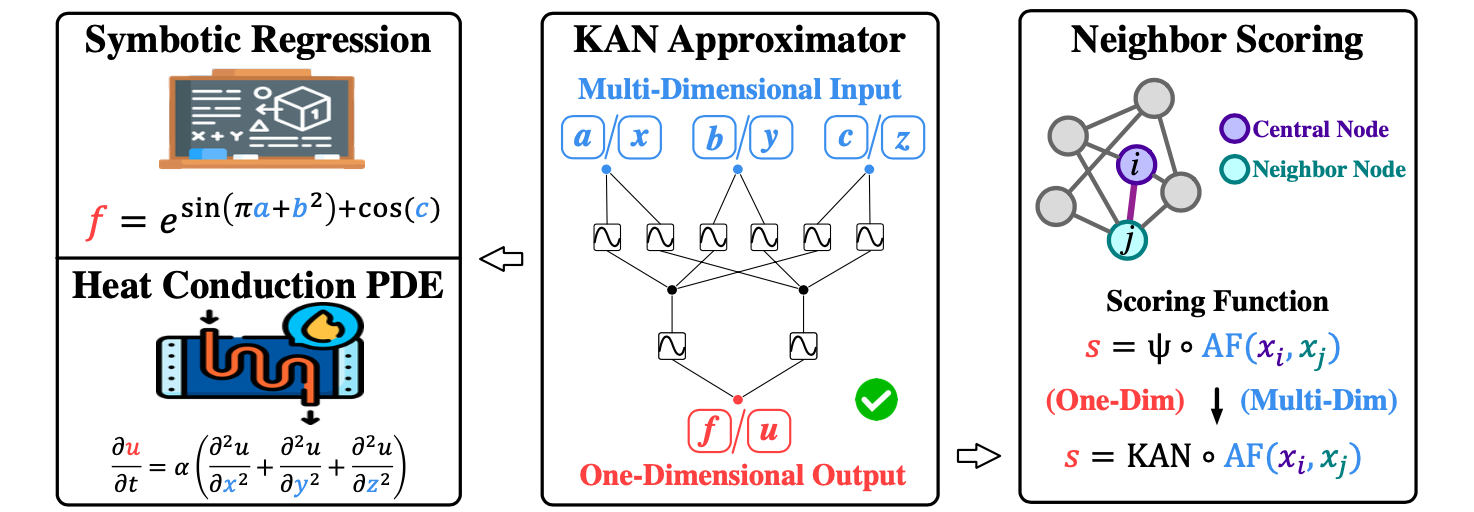

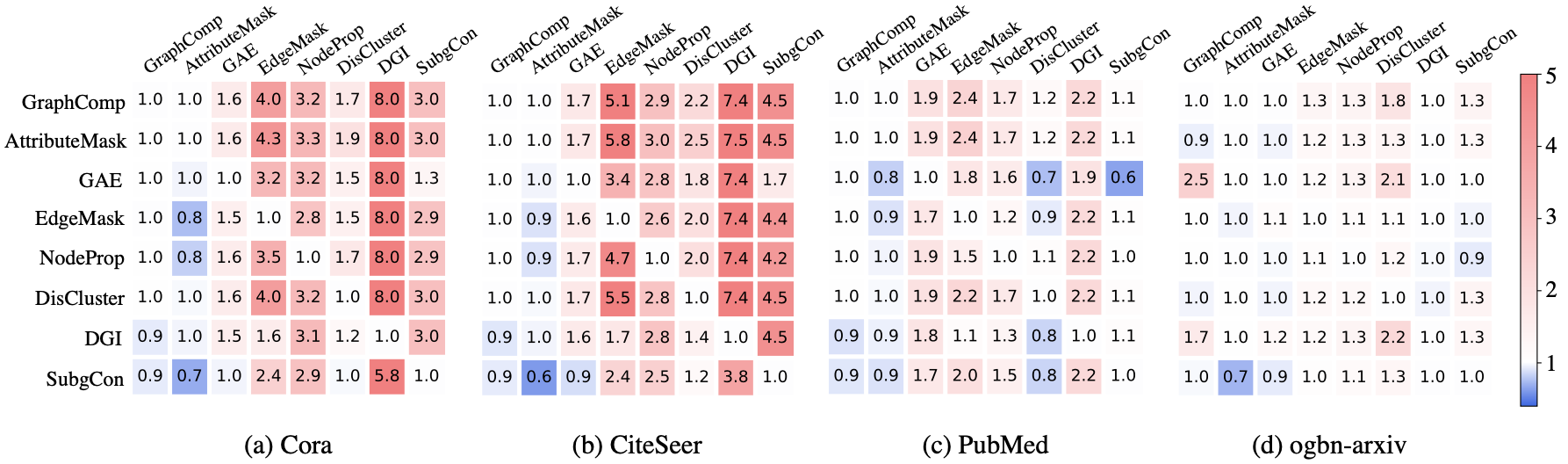

KAA: Kolmogorov-Arnold Attention for Enhancing Attentive Graph Neural Networks [Paper, Code]

Taoran Fang, Tianhong Gao, Chunping Wang, Yihao Shang, Wei Chow, Lei Chen, Yang Yang

We unify the scoring functions of existing attentive GNNs and compare the theoretical expressive power of KAN-based and MLP-based attention.
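As a rough illustration only (not the paper's implementation), the sketch below contrasts two kinds of scoring function for attentive GNNs. The first is GAT-style: a linear map of the concatenated pair [h_i || h_j] followed by a fixed LeakyReLU (the weight matrix W is omitted for brevity). The second is KAN-style: each input coordinate passes through its own learnable univariate function and the results are summed. The Gaussian-RBF basis (used here in place of the B-splines of the original KAN), the single-layer form, the helper names, and all weight values are hypothetical choices made for this example.

```python
import math


def rbf_basis(x, centers, width):
    """Gaussian radial basis values of a scalar x. Hypothetical stand-in
    for the B-spline basis used by the original KAN."""
    return [math.exp(-(((x - c) / width) ** 2)) for c in centers]


def kan_score(z, coeffs, centers, width=1.0):
    """Single-output KAN-style layer: sum_k phi_k(z_k), where each phi_k is
    a learnable univariate function, coeffs[k] . basis(z_k)."""
    total = 0.0
    for k, x in enumerate(z):
        basis = rbf_basis(x, centers, width)
        total += sum(c * b for c, b in zip(coeffs[k], basis))
    return total


def gat_score(z, a, slope=0.2):
    """GAT-style score: LeakyReLU(a . z), i.e. a fixed nonlinearity applied
    after a linear map of z = [h_i || h_j]."""
    s = sum(ai * zi for ai, zi in zip(a, z))
    return s if s > 0 else slope * s


def attention_weights(h, i, neighbors, score_fn):
    """Softmax over j in neighbors of score_fn([h_i || h_j])."""
    scores = [score_fn(h[i] + h[j]) for j in neighbors]  # list concat = [h_i || h_j]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example: node 0 attends over neighbors 1 and 2 (2-d features).
# All weights below are arbitrary illustrative values, not trained ones.
h = [[0.5, -1.0], [1.0, 0.0], [-0.5, 2.0]]
centers = [-1.0, 0.0, 1.0]
coeffs = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.2, 0.2], [-0.3, 0.1, 0.4]]
a = [0.3, -0.1, 0.2, 0.4]

w_kan = attention_weights(h, 0, [1, 2], lambda z: kan_score(z, coeffs, centers))
w_gat = attention_weights(h, 0, [1, 2], lambda z: gat_score(z, a))
```

Both scoring functions feed the same softmax normalization; only the map from a node pair to a scalar score differs, which is the component the comparison above concerns.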

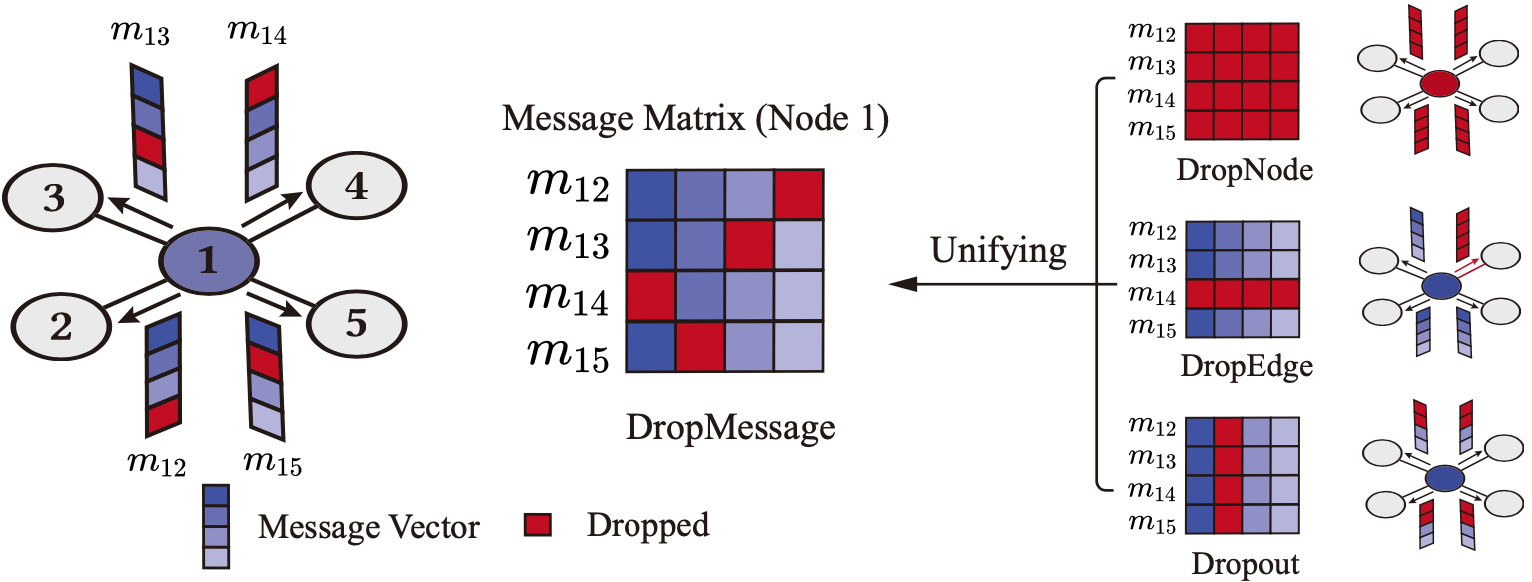

Dropmessage: Unifying Random Dropping for Graph Neural Networks [Paper, Code]

Taoran Fang, Zhiqing Xiao, Chunping Wang, Jiarong Xu, Xuan Yang, Yang Yang

Our proposed DropMessage unifies random dropping methods on graphs and alleviates over-smoothing, over-fitting, and non-robustness in GNN models.
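A minimal sketch of the idea, assuming a toy message-passing layer in which the message on edge (src, dst) is simply a copy of the source node's features. DropMessage drops individual elements of the |E| x d message matrix, with inverted-dropout rescaling. With this toy message function, DropEdge corresponds to zeroing whole rows, DropNode to zeroing every row sent by a dropped node, and feature Dropout to zeroing the same coordinate in all messages sent by a node. The helper names `compute_messages`, `drop_message`, and `aggregate` are hypothetical and not taken from the released code.

```python
import random


def compute_messages(features, edges):
    """Hypothetical message function: the message on edge (src, dst) is a
    copy of the source node's feature vector, giving an |E| x d matrix."""
    return [list(features[src]) for src, _ in edges]


def drop_message(messages, p, rng=random):
    """Zero each element of the message matrix independently with
    probability p; rescale survivors by 1 / (1 - p) so that the expected
    value of every element is unchanged."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    scale = 1.0 / (1.0 - p)
    return [
        [0.0 if rng.random() < p else x * scale for x in row]
        for row in messages
    ]


def aggregate(messages, edges, num_nodes):
    """Sum-aggregate the (possibly dropped) messages at each target node."""
    dim = len(messages[0]) if messages else 0
    out = [[0.0] * dim for _ in range(num_nodes)]
    for (_, dst), msg in zip(edges, messages):
        for k, x in enumerate(msg):
            out[dst][k] += x
    return out


# Toy graph: 3 nodes with 2-d features and 4 directed edges.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
edges = [(0, 1), (1, 2), (2, 0), (0, 2)]

messages = compute_messages(features, edges)
dropped = drop_message(messages, p=0.5, rng=random.Random(0))  # training only
hidden = aggregate(dropped, edges, num_nodes=3)
```

Because `drop_message` only touches the message matrix, it can sit between message computation and aggregation in any message-passing layer; at inference time it is simply skipped.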

📖 Education

- 2021.09 - now, Ph.D. in Computer Science and Technology, Zhejiang University, Hangzhou, Zhejiang.

- 2017.09 - 2021.06, Bachelor’s degree in Software Engineering, Zhejiang University, Hangzhou, Zhejiang.

- 2014.09 - 2017.06, Hangzhou No.2 High School, Hangzhou, Zhejiang.

💬 Invited Talks

- 2023.02, Invited talk on the AAAI 2023 Distinguished Paper. [Video]

- 2023.10, Report on graph pre-training. [Video]

- 2024.06, Report on GraphTCM. [Video]

💻 Internships

- 2020.03 - 2020.11, Design and development of Volcano Engine, ByteDance.